Serverless is another approach to cloud computing. It’s an automatically provisioned environment for running code. It’s even more fine-grained than microservices and just gaining traction as a standard cloud offering. What is serverless capable of doing and good for? It should handle anything that a regular VM can. But would it be practical to run a website on it? Let’s find out by running an Express server on AWS Lambda, which is currently one of the biggest serverless platforms.

Please keep in mind that, while most of the information below is still current, the following test is something I performed back in October 2017.

There are technical, organizational and business reasons to try Express with a rich JavaScript framework on Lambda. Lambda is the epitome of the pay-as-you-go model, virtualization and scalability. By looking at this article it’s easy to see why it can be cheaper to use, and serverless and front-ends are inherently scalable as well. Having the whole infrastructure managed by Amazon feels great. It encourages optimizing code for performance and horizontal scaling to save costs, which has the positive side effect of making code faster. Knowing how Lambdas are provisioned is still a must, just like with regular servers. Because new instances are regularly booted up and old ones removed, knowing how to influence the process is very valuable. The time saved on administering servers is shifted towards writing faster code and hacking auto provisioning to one’s advantage. Technically, AWS Lambda is still changing and improving. It may be too early for commercial use, but it’s doubtful the concept of serverless will die.

Initially, serving any website on AWS Lambda sounds like a bad idea. Lambdas are supposed to be optimized, single-purpose pieces of code, mainly for processing data and not serving content. However, putting a website on Lambda is actually pretty easy. A while ago, AWS’s API Gateway started supporting HTTP responses entirely generated by the Lambda’s code, which opened Lambda up for various new use cases. Their headers and bodies can now be anything, including HTML, which is a must to properly set up a website. Serving a basic page is now as easy as returning its HTML and telling the caller that the response’s Content-Type is text/html.

But for anything more complex, chances are a JavaScript framework will be used instead. How much work is involved in adapting, say, Ember to work on Lambda? An Ember app can consist of many related components, and those components of many assets, such as CSS and images. If FastBoot, which is Ember’s server-side rendering library, is added on top of that, the server will also be used to pre-render the website. Using an alternative to Express as the server would be possible, but not worth it.

Architecture

For the test, Ember 2.15.1 was used with FastBoot 1.0.5 under Node.js 6.10. The vast majority of development was done locally using Docker running a node image as the test environment. First, code was run without FastBoot and later with it, by attaching the FastBoot middleware to Express, which is a more involved approach to using FastBoot. Why this was required to run Ember on Lambda is explained later.

Due to Lambda’s ephemeral nature, Express can’t constantly run and listen for requests. This responsibility had to be taken over by API Gateway and Express had to be adapted to answer Gateway’s requests. More on this later as well.

Development Process And Solutions

The viability of using Lambda for websites was tested with a very simple Ember project that had a few subpages and assets and called a few endpoints. All of the endpoints were other Lambdas, which are easy to call using Amazon’s aws-sdk npm module if they’re within the same region and network. The permissions for calling Lambdas can be generated and applied on AWS’s IAM page. There’s no coding experience required to do that, just clicking around and choosing the right permissions.
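A minimal sketch of calling one of those endpoint Lambdas, assuming version 2 of aws-sdk. The helper name invokeJson, the function name get-articles and the payload are hypothetical, and the client is passed in so the helper itself has no dependencies. Error results reported by the called function are not handled here.

```javascript
// Hypothetical helper: call another Lambda synchronously and parse its
// JSON result. `lambdaClient` is expected to be an aws-sdk v2 client,
// e.g. new AWS.Lambda({ region: 'eu-west-1' }).
function invokeJson (lambdaClient, functionName, payload) {
  return lambdaClient
    .invoke({
      FunctionName: functionName,
      InvocationType: 'RequestResponse', // wait for the result
      Payload: JSON.stringify(payload)
    })
    .promise()
    .then((data) => JSON.parse(data.Payload))
}

// Possible usage (requires the aws-sdk module and IAM permissions):
// const AWS = require('aws-sdk')
// invokeJson(new AWS.Lambda({ region: 'eu-west-1' }), 'get-articles', { page: 1 })
//   .then((articles) => console.log(articles))
```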

Developing the whole solution was easy. Writing source code was done locally, and code specific to running Express on Lambda was separate and completely independent of Ember. What is specific to Lambda is mostly available on GitHub already. There’s an article about running Express on AWS, which explains everything. The GitHub repo referenced in the article is what was used for the test. In practice, the library converts an API Gateway request into an Express-compatible request and runs an HTTP server using Node.js’s http module with rules from the Express app. The server lasts until AWS decides to remove the Lambda instance it’s running on, so it can be reused for multiple requests spread out in time. This is why, at least while idling, Lambdas can handle requests just as fast as regular VMs.
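The conversion idea can be illustrated with a simplified sketch. This is not the library’s actual code: the names eventToRequestOptions and forward are hypothetical, and the sketch assumes a server already listening on a local TCP port and an event in the API Gateway proxy format.

```javascript
const http = require('http')
const querystring = require('querystring')

// Map an API Gateway proxy event onto options for http.request().
function eventToRequestOptions (event, port) {
  const query = querystring.stringify(event.queryStringParameters || {})
  return {
    host: '127.0.0.1',
    port,
    method: event.httpMethod,
    path: query ? `${event.path}?${query}` : event.path,
    headers: event.headers || {},
    agent: false // one connection per request, closed afterwards
  }
}

// Forward the event to the local HTTP server and resolve with a
// response object in the shape API Gateway expects.
function forward (server, event) {
  return new Promise((resolve, reject) => {
    const options = eventToRequestOptions(event, server.address().port)
    const req = http.request(options, (res) => {
      const chunks = []
      res.on('data', (chunk) => chunks.push(chunk))
      res.on('end', () => resolve({
        statusCode: res.statusCode,
        headers: res.headers,
        body: Buffer.concat(chunks).toString('utf8')
      }))
    })
    req.on('error', reject)
    req.end(event.body || undefined)
  })
}
```

Because the server object lives outside the handler, a warm instance only pays for the forwarding step on each request.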

This is what the code for running Express on Lambda looks like in its entirety:

const awsServerlessExpress = require('aws-serverless-express')

const app = require('./app')

const server = awsServerlessExpress.createServer(app)

exports.handler = (event, context) => awsServerlessExpress.proxy(server, event, context)

The app module is a configured Express instance:

const fastbootMiddleware = require('fastboot-express-middleware')

const express = require('express')

const app = express()

const path = require('path')

const bucketName = 's3-unique-bucket-name'

app.all('/assets/*', (req, res) => {

res.redirect(301, `https://s3-eu-west-1.amazonaws.com/${bucketName}${req.path}`)

})

app.all('/robots.txt', (req, res) => {

res.sendFile(path.join(__dirname, 'dist/robots.txt'))

})

app.all('/*', fastbootMiddleware('dist'))

module.exports = app

Because FastBoot needs to function as middleware, and the Express instance needs to be called by a special server ready to consume Lambda requests, the fastboot-express-middleware package was used instead of the simpler fastboot-app-server.

Solving Issues With URLs And Ember Routing

Absolute URLs in Ember don’t work out of the box on AWS Lambda using API Gateway. That’s because the server path to Express on AWS is preceded by a few subpaths: at least one for the API Gateway deployment environment name and more if extra top-level resources are added for the Gateway. For example, a stylesheet that should load from https://ba127812.execute-api.eu-west-1.amazonaws.com/prod/express-server/assets/website-bfd7ca6b1f86605d0f0aff31582f75bc.css will instead be requested from https://ba127812.execute-api.eu-west-1.amazonaws.com/assets/website-bfd7ca6b1f86605d0f0aff31582f75bc.css due to the use of absolute paths in source code. This effectively means Ember assets won’t load, and routing through the History API will strip the API Gateway subpaths from the address or route incorrectly by treating the subpath as part of Ember’s routes. The easiest solution here is to simply use API Gateway with a registered domain with no subpaths. It can be achieved by configuring AWS to handle the domain or by adding a reverse proxy (possibly nginx) to relay requests for the domain to API Gateway (the latter solution was used for the test). Switching to another domain won’t require a rebuild of the Ember app; it will just work correctly.
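The way a browser resolves both kinds of paths can be reproduced with the standard URL class. This is only an illustration: the stylesheet name is shortened, and the base address is the example Gateway URL from above.

```javascript
// Page served from the API Gateway default URL (environment plus resource subpath).
const base = 'https://ba127812.execute-api.eu-west-1.amazonaws.com/prod/express-server/'

// An absolute path replaces the whole path of the page, losing the subpath.
const absolute = new URL('/assets/website.css', base).href

// A relative path is resolved against the page, keeping the subpath.
const relative = new URL('assets/website.css', base).href

console.log(absolute)
// https://ba127812.execute-api.eu-west-1.amazonaws.com/assets/website.css
console.log(relative)
// https://ba127812.execute-api.eu-west-1.amazonaws.com/prod/express-server/assets/website.css
```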

Routing With Subpaths

However, if the goal is to use the API Gateway’s default URL for the Lambda, it’s possible to reconfigure Ember to inject the subpath during build and use it during runtime. There are two important options to focus on: EmberApp’s fingerprint.prepend in /ember-cli-build.js and rootURL in /config/environment.js. Only the second one is needed for API Gateway. It must contain the subpath. For example:

if (environment === 'production') {

ENV.rootURL = '/prod/express-server/'

}

This setting will be enough for Ember to prepend the subpath to asset paths and make the router always prepend it when routing. rootURL is applied during ember build -prod, so unfortunately the subpath can’t be changed at runtime, which may force a rebuild with a different rootURL once the website is served under a different subpath (or no subpath at all). Absolute addresses inside CSS won’t be adjusted the same way, so they need to be manually changed to relative paths (which was done for the test). Using a SASS function for generating correct URLs may be the best option if absolute URLs are preferred. However, it may be difficult to do so if assets are served from multiple locations.

ember-cli-sass has a way of adding SCSS files to the build. It can be used to serve a variable with rootURL to other SCSS files, so they can again use absolute URLs instead of relative ones.

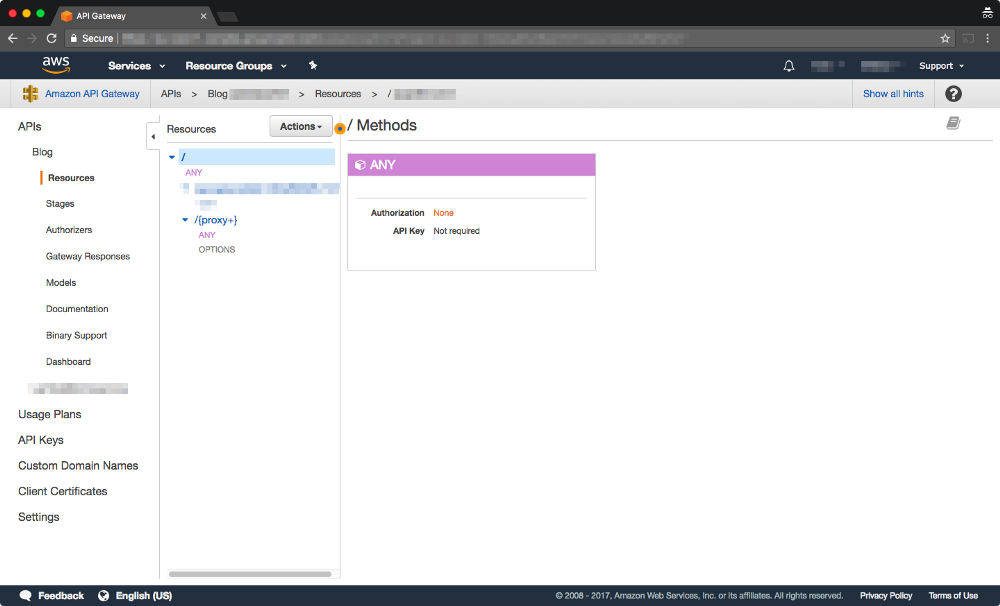

With the exception of the subpath issue, making Gateway send all routes under the website URL to Express was straightforward. Gateway allows creating a proxy resource, which is a wildcard URL portion that can be handled by Express no matter its value.

Speed

It’s a little bit noticeable that a Lambda function is serving an Ember website, even when the Lambda is hot and waiting for calls. Going over 512MB of memory improved the performance of the simple website only marginally. For comparison, it handled requests slower than an equivalent DigitalOcean VM costing $10 a month (with 1GB of memory). But it’s very surprising that the difference was so small.

Having Express run Ember on every request to Lambda is actually really fast. The server is stored in memory for a while before it’s automatically destroyed by AWS or until some traffic is moved to another instance of the server when the load increases, which is also automatic.

The biggest performance bottleneck compared to regular VMs is cold starts. That’s when AWS spins up another Lambda instance with Express, which is a Linux VM underneath, to serve requests. Any request transferred to a new and cold instance is handled slowly, taking a few seconds longer due to the added bootup time. This may be a deal breaker for many websites. Some ways to minimize the effect are presented below.

Service Workers

Caching content with a Service Worker, which is a new JavaScript API.

A Service Worker can render a website almost instantly but beware of using a caching strategy that’ll reuse old content for too long.

Assets Relocation

Avoiding serving assets through the Lambda function. All static content should be served using a CDN or cloud storage, for example Amazon S3. Streaming large local or cloud media through the Lambda function is very slow due to low transfer speeds. It can also potentially cause AWS to create extra instances of Express, because many assets are requested by browsers in parallel. It’s possible to either rewrite all URLs on the website to point to a CDN or cloud storage, or do 301, 302 or 307 redirects to it by appropriately configuring Express. The latter solution doesn’t affect Ember at all, because requests for media can be redirected using another app.get() call added to Express before they even reach Ember:

app.get('/assets/*', (req, res) => {

res.redirect(301, 'https://s3-eu-west-1.amazonaws.com/bucket' + req.path)

})

For some reason, though, the S3 bucket manager website would not allow some files with hashed filenames to be uploaded. Only after their names were changed to something simpler and changed back on S3 after upload could they be served. It’s a minor issue, probably related to JavaScript on the S3 bucket manager website, but worth mentioning. Fortunately, hashes remain the same for unchanged files between Ember builds.

After an asset is loaded for the first time, the browser caches it and loading is much faster. A 302 or 307 code will add extra hits to Express, because the browser has to check whether the redirect is still in effect. These will be fast calls, but avoidable. Responding with the permanent redirect HTTP code, 301, is generally better, because the browser will avoid asking Express about assets again. And since their names are hashed, cache busting later is still possible. Using a library such as s3-proxy isn’t enough, though. Even though it allows resources to reside on S3, they will be streamed entirely through Express and that improves nothing.

If assets are served from S3, a copy must reside on the server for server-side rendering (just the JavaScript). It’s important to note that assets served directly from S3 which reference resources by absolute paths will break. The safest solution is to convert absolute paths to relative ones (as long as assets are kept in the same place relative to each other on S3 as they are inside Ember builds). That way, assets have correct references whether they’re downloaded from S3 or used by Ember for server-side rendering.

There may be a way of forcing FastBoot to retrieve assets from S3 to remove duplication. However, it was something outside the scope of the test.

No Cold Starts

Hitting the Lambda function on a schedule to keep it hot and ready to accept new requests. The easiest way to do it is to configure AWS CloudWatch using AWS Console to make a regular request to the Lambda function. Apparently, Amazon closes idling functions after 45 minutes on average, but this is not an official statement, so it can change over time or dynamically based on how much traffic AWS has to handle at a given time. After all, the auto provisioning logic is completely up to Amazon.
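If the schedule targets the function directly, the ping arrives as a CloudWatch scheduled event rather than an HTTP request. A minimal sketch of answering such pings early follows, assuming scheduled events carry source set to aws.events; the wrapper name keepWarm and the 'warm' reply are hypothetical.

```javascript
// Hypothetical wrapper: answer CloudWatch scheduled pings right away
// and pass every other event on to the real handler.
function keepWarm (handler) {
  return (event, context, callback) => {
    if (event && event.source === 'aws.events') {
      // The instance is now warm; no need to involve Express.
      return callback(null, 'warm')
    }
    return handler(event, context, callback)
  }
}

// Possible usage with the handler shown earlier:
// exports.handler = keepWarm((event, context) =>
//   awsServerlessExpress.proxy(server, event, context))
```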

Better Source Code And In-memory Cache

Optimizing source code and caching. While this doesn’t deal with cold starts directly, it can reduce their number and reduce the overall time it takes to serve requests by any instance. Lambdas will finish running faster and be able to serve next calls sooner. One major thing to profile in search of optimizations is server-side rendering. For example, if the server has to wait for HTTP requests to finish before returning the content of the website, it may be a good idea to cache it and have the client browser download the newest version with an AJAX request instead.
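Since a warm instance keeps module-level state between calls, an in-memory cache can be as small as the sketch below. The helper name cached, its parameters and the loadMenu function in the usage comment are assumptions made for illustration; this was not part of the test.

```javascript
// Module-level state survives between invocations on a warm instance
// and is lost whenever AWS removes the instance.
const cache = new Map()

// Return the cached value for `key` if it is younger than `ttlMs`,
// otherwise call `loader` and remember its result. The result may be a
// promise, in which case a rejected promise is cached as well.
function cached (key, ttlMs, loader, now = Date.now()) {
  const hit = cache.get(key)
  if (hit && now - hit.storedAt < ttlMs) {
    return hit.value
  }
  const value = loader()
  cache.set(key, { value, storedAt: now })
  return value
}

// Possible usage: reuse a slow lookup for one minute.
// const menu = cached('menu', 60 * 1000, () => loadMenu())
```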

Currently, the largest Lambda instance can have 1536MB of memory and a “proportionately” fast processor. Only memory is disclosed when choosing the instance size. Over 1.5GB of memory should be enough for most needs, especially because Lambdas scale horizontally. While running a database on Lambda should technically be possible, no projects that would enable it were found. Databases must be exceptionally fast, which is difficult to ensure on Lambda. But more support for databases may arrive in the future.

Conclusion

With all the code, API Gateway settings, assets and Lambdas in place, the server was ready and the test finished. Virtually everything worked and was easy to set up. In fact, the results were better than expected.

Express wasn’t run on thousands of instances, just a few, but it can be at any point and AWS will take care of it automatically. Plus, usage and cost statistics are readily available in the AWS Console, so it’s easy to do some general profiling of Lambda and see how well it performs. All console.log() calls are recorded asynchronously to CloudWatch, so there’s no need to send logs to another service outside of AWS and delay Lambdas’ execution as a result.

There’s one thing in the Ember FastBoot documentation that seems correct only due to Lambdas’ cold starts. It’s written in the “AWS Lambda” section of the guide, which states that “Lambda is not recommended for serving directly to users, due to unpredictable response times, but is a perfect fit for pre-rendering or serving to search crawlers.”

First, response times are quite predictable with Lambda. Second, it’s advised that search crawlers be treated like regular users, and it has been advised for a while now, since the HTML5 History API is commonplace and developers are encouraged to create websites with server-side rendering and graceful degradation in mind. Third, correctly discerning between human visitors and web crawlers is difficult and often impossible. Some web crawlers do tell the website that they’re not humans, but often they do not, and it makes sense that they don’t. Ultimately, web crawlers are supposed to aid users in finding the content they want by knowing what content websites provide and where. Serving different content to humans and crawlers is pointless, and doing so doesn’t make implementing websites any easier for developers. Chances are that the guide for FastBoot (not the one on GitHub) was written a while ago, when Lambda was less stable and complete, and that’s why it’s so critical of it. The performed test produced some decent results.

There are some security considerations to keep in mind, too. By default, Lambdas have pretty weak restrictions in terms of the number of instances that can be provisioned at the same time. This can potentially lead to attacks and large bills. Rules can be applied to Lambda and other AWS services to decrease abuse. Lambdas are not visible on the Internet unless they’re made public by API Gateway. API Gateway is a powerful way to keep abusers in check.